Kazushi Yamamoto, Sehwa Chun, Yuki Sekimori, Chihaya Kawamura

Introduction

On August 28 and 29, students and enthusiasts gathered online to take part in the Underwater Robot Convention in JAMSTEC 2021. The event, hosted by NPO Japan Underwater Robot Network, serves as a forum for participants to exchange technical ideas and build networks through presentations and underwater robot competitions. An overview of this year’s event can be found on the convention’s website [1] (in Japanese), and the events of previous years are described in [2], [3], [4], and [5].

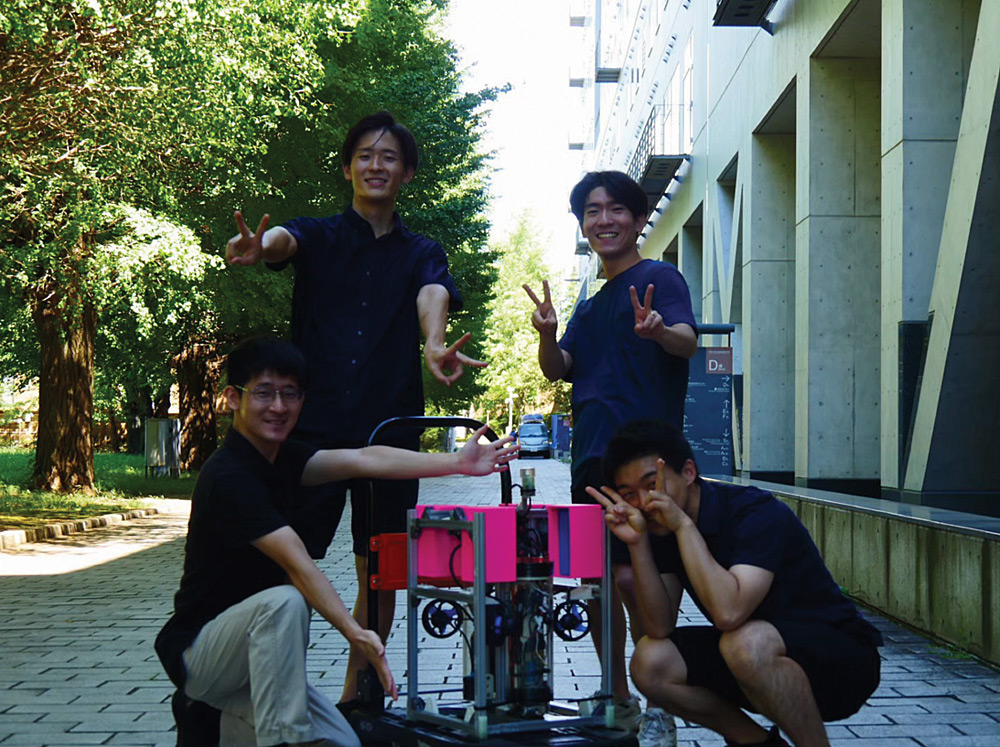

We, the authors, are master’s course students in Prof. Maki’s laboratory at the University of Tokyo. We participated in the AI Challenge division as team “UT Maki Lab.” [Figure 1]. Our primary aim was to accumulate basic knowledge and skills in underwater robotics in preparation for future research. We also learned how to collaborate as a team, which is crucial in developing and operating underwater robots.

Rules

In the AI Challenge division, participants proposed a mission that combined underwater robots and artificial intelligence (AI). The event organizers called for “exciting” robots “capable of executing AI missions underwater.” Each team uploaded a poster and two five-minute videos: one presenting the robot and AI mission, and another demonstrating its capability. The judges evaluated overall performance based on the four criteria listed in Table 1.

Table 1. Criteria for AI Challenge division

| Criteria | Points | Description |
|---|---|---|
| Presentation | 20 | The overall quality of presentation, videos and poster. |
| Idea | 30 | The novelty of the AI mission. |
| Technical contents | 30 | The quality of hardware, software, algorithms, etc. |
| Capability | 20 | The performance of the robot. Maximum points for full autonomy (without tether cables). |

AI Mission

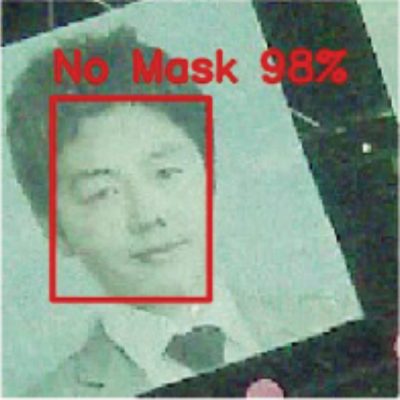

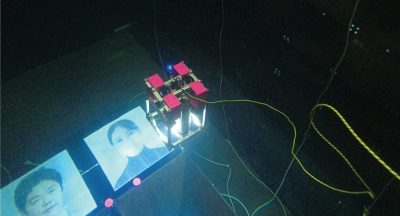

Given the freedom to choose our own AI mission, we wanted a topic that was both exciting and technically interesting. With the world under the threat of COVID-19, we thought that a related mission would be attractive. Based on our slogan, “No exception to prevent infection,” our mission was for an underwater robot to identify faces without a mask using an AI-based computer vision (CV) algorithm and then cover those faces with a mask. We installed three portraits and a mask station in the 8 m cubic water tank at the Institute of Industrial Science, the University of Tokyo [Figure 2]. One of the portraits wore a mask, and the other two did not. The funnel-shaped mask station guides the robot into alignment so that it can dock even with a small position error.

Our AI mission was to cover all the faces not wearing a mask. The robot searches for and detects a maskless face among the submerged photograph portraits and delivers a mask from the docking station. The mission was divided into three tasks: detect, catch, and delivery. During the detect task, the robot hovers and searches for the portraits using the AI-based CV algorithm. If the estimated probability that a portrait is wearing a mask is low, the robot transitions to the next task. During the catch task, the robot aims to dock on the mask station and fetch a metallic mask with its electromagnet. During the delivery task, it delivers the mask to the portrait identified in the detect task. After the robot puts the mask on the target, it transitions back to the detect task to search for any remaining maskless portraits. Once every portrait is wearing a mask, the robot completes the mission and ascends to the surface.
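The detect, catch, and delivery cycle described above can be read as a small finite-state machine. The Python sketch below is a minimal illustration under stated assumptions, not ARIEL’s actual ROS code: the `Task` names, the hypothetical `MASK_THRESHOLD` of 0.5, and the `next_task` and `run_mission` helpers are illustrative choices, and the simulation assumes that `mask_probs` covers every portrait in the tank and that docking and mask placement always succeed.

```python
from enum import Enum, auto


class Task(Enum):
    DETECT = auto()
    CATCH = auto()
    DELIVERY = auto()
    ASCEND = auto()  # mission complete: return to the surface


# Hypothetical threshold: below this mask probability a portrait counts as maskless.
MASK_THRESHOLD = 0.5


def next_task(task, mask_probs, docked=False, mask_placed=False):
    """Return the next task from the current task and the latest observations."""
    if task is Task.DETECT:
        if any(p < MASK_THRESHOLD for p in mask_probs):
            return Task.CATCH  # a maskless face was found: go fetch a mask
        return Task.ASCEND  # everyone is wearing a mask
    if task is Task.CATCH:
        return Task.DELIVERY if docked else Task.CATCH
    if task is Task.DELIVERY:
        return Task.DETECT if mask_placed else Task.DELIVERY
    return Task.ASCEND


def run_mission(mask_probs):
    """Simulate the mission, assuming docking and delivery always succeed.

    Returns the sequence of tasks the robot passes through.
    """
    probs = list(mask_probs)
    task = Task.DETECT
    history = [task]
    target = None
    while task is not Task.ASCEND:
        if task is Task.DETECT:
            maskless = [i for i, p in enumerate(probs) if p < MASK_THRESHOLD]
            target = maskless[0] if maskless else None
            task = next_task(task, probs)
        elif task is Task.CATCH:
            task = next_task(task, probs, docked=True)
        elif task is Task.DELIVERY:
            probs[target] = 1.0  # the target portrait now wears a mask
            task = next_task(task, probs, mask_placed=True)
        history.append(task)
    return history
```

For example, with one masked and two maskless portraits, `run_mission([0.9, 0.1, 0.2])` passes through catch and delivery twice before a final detect pass and the ascent.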

AUV

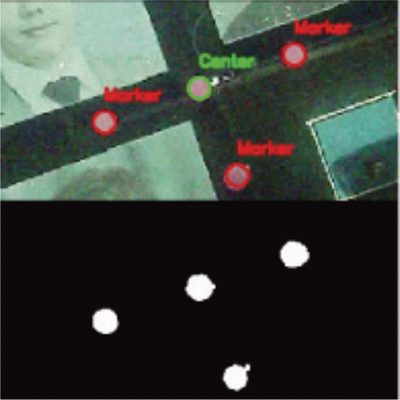

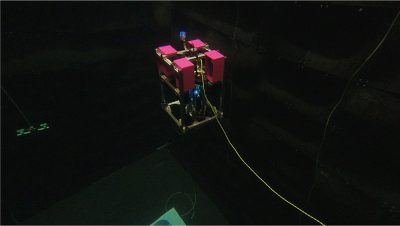

To demonstrate our mission, we developed a hovering-type autonomous underwater vehicle named “AI and ROS Integrated vEhicLe” (ARIEL) [Figure 3, Figure 4]. ARIEL estimates its depth using a depth sensor that measures water pressure. It estimates its position in the horizontal plane by observing the markers attached to the station with a camera [Figure 5]. It is equipped with an electromagnet on its bottom to transport the masks.
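Estimating depth from a pressure sensor follows from the hydrostatic relation d = (p - p_0) / (ρg), where p is the measured absolute pressure, p_0 the pressure at the water surface, ρ the water density, and g the gravitational acceleration. The article does not state ARIEL’s sensor model or constants, so the sketch below assumes fresh water (ρ = 1000 kg/m³), standard gravity, and standard atmospheric pressure at the surface; in practice p_0 would typically be measured at the surface before diving.

```python
# Assumed constants (not taken from the article).
RHO_FRESH_WATER = 1000.0  # kg/m^3, assumed fresh water in the test tank
G = 9.80665               # m/s^2, standard gravity
P_ATM = 101325.0          # Pa, assumed surface (atmospheric) pressure


def depth_from_pressure(p_abs_pa, p_surface_pa=P_ATM, rho=RHO_FRESH_WATER, g=G):
    """Depth in metres from absolute pressure: d = (p - p_surface) / (rho * g)."""
    return (p_abs_pa - p_surface_pa) / (rho * g)
```

Passing a surface pressure measured just before the dive, rather than relying on `P_ATM`, removes the offset caused by weather-related changes in atmospheric pressure.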

ARIEL is controlled by a Raspberry Pi microcomputer and Teensy drivers. We implemented ARIEL’s software using ROS (Robot Operating System), an open-source set of libraries and tools commonly used to develop robotics applications. ROS is a powerful tool for parallel, distributed processing such as handling sensor and actuator signals. ARIEL’s convolutional neural network estimates the positions of the faces on the bottom of the water tank and calculates the probability that the person in each portrait is wearing a mask. We built the AI software on the TensorFlow library and used the MobileNetV2 neural network [6] for mask recognition [Figure 6]. The software runs quickly and accurately on the Raspberry Pi despite its limited computational power. ARIEL decides its action based on its current state and the position of the photograph, and it completes the mission accurately by transitioning to the appropriate mode for each situation.
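Deciding which portrait to act on can be sketched as a filter-then-select step over the network’s outputs. In this hypothetical Python example, each `FaceDetection` holds a face position on the tank bottom and the mask probability from the CNN; `select_target` keeps faces below an assumed 0.5 threshold and picks the one nearest the robot. The field names, the coordinate frame, the threshold, and the nearest-first rule are illustrative assumptions, not details given in the article.

```python
import math
from dataclasses import dataclass
from typing import Optional, Sequence, Tuple


@dataclass
class FaceDetection:
    """One detected portrait face (hypothetical structure)."""
    x: float       # position on the tank bottom in metres (assumed frame)
    y: float
    p_mask: float  # network-estimated probability that a mask is worn


def select_target(
    detections: Sequence[FaceDetection],
    robot_xy: Tuple[float, float],
    threshold: float = 0.5,  # assumed decision threshold
) -> Optional[FaceDetection]:
    """Return the nearest maskless face, or None if every face wears a mask."""
    maskless = [d for d in detections if d.p_mask < threshold]
    if not maskless:
        return None
    rx, ry = robot_xy
    # Nearest-first is an illustrative ordering rule, chosen to shorten travel.
    return min(maskless, key=lambda d: math.hypot(d.x - rx, d.y - ry))
```

Returning `None` when no face falls below the threshold maps naturally onto the end-of-mission condition, where the robot ascends to the surface.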

We started working in earnest in June 2021. With less than three months left before the submission deadline of August 20, we needed to progress efficiently. We divided ARIEL’s development into three subprojects (hardware, system software, and AI algorithm) and appointed a person in charge of each, so that the subprojects could proceed in parallel. While each member focused on their own part, it was also important to collaborate and exchange opinions. By July, the hardware was largely complete, and ARIEL could be operated under manual control. At the beginning of August, we integrated the system software and AI, which had been developed in parallel, with the hardware, completing the ARIEL prototype.

Starting in August, we tested ARIEL in the water tank and made adjustments: we adjusted the centers of buoyancy and gravity, tuned the PID parameters, and set the parameters of the AI detector. By the submission date, ARIEL was complete as an autonomous underwater robot capable of judging whether a mask is on and transporting the mask by itself [Figure 7, Figure 8]. ARIEL achieved a 53% mission success rate, succeeding in 9 out of 17 trials.
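The PID tuning mentioned above refers to controllers of the general form u = K_p e + K_i ∫e dt + K_d de/dt, where e is the error between the setpoint and the measurement. The article does not give ARIEL’s controller structure or gains, so the discrete-time Python class below is a generic sketch (for example, for depth holding) with hypothetical gains and an optional output clamp standing in for thruster limits; it does not include integral anti-windup.

```python
class PID:
    """Discrete-time PID controller: u = kp*e + ki*sum(e*dt) + kd*de/dt."""

    def __init__(self, kp, ki, kd, output_limit=None):
        self.kp, self.ki, self.kd = kp, ki, kd
        self.output_limit = output_limit  # symmetric clamp on |u|, or None
        self.integral = 0.0
        self.prev_error = None  # no derivative term on the first update

    def update(self, setpoint, measurement, dt):
        error = setpoint - measurement
        self.integral += error * dt  # rectangular integration
        if self.prev_error is None:
            derivative = 0.0
        else:
            derivative = (error - self.prev_error) / dt
        self.prev_error = error
        u = self.kp * error + self.ki * self.integral + self.kd * derivative
        if self.output_limit is not None:
            u = max(-self.output_limit, min(self.output_limit, u))
        return u


# Usage: hold 2.0 m depth on a toy plant (assumption) whose depth rate equals the command.
pid = PID(kp=1.0, ki=0.0, kd=0.0)
depth, dt = 0.0, 0.1
for _ in range(200):
    depth += pid.update(2.0, depth, dt) * dt
```

On this toy plant a proportional gain alone drives the depth to the setpoint; a real vehicle with buoyancy offsets and drag would usually also need the integral term.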

On the first day of the event, each team shared its presentation video and answered questions online. On the second day, each team likewise shared its demonstration video, and the event closed with the award ceremony. Our videos can be found on our laboratory’s YouTube channel [7], and our final scores are shown in Table 2. With an outstanding score of 91 points, we came in first place out of three teams.

Looking back, the Underwater Robot Convention made us realize the difficulty of developing an underwater robot and the importance of collaboration, and it connected us with people in related industries. We have no doubt that the experience gained during the intense three months of development and at the event will be a great strength in our future research.

Table 2. Final scores for “Team UT Maki Lab.”

| Presentation | Idea | Technical contents | Capability | Total |
|---|---|---|---|---|
| 18 | 23 | 29 | 20 | 91 |

After the event

Shortly after the Underwater Robot Convention in JAMSTEC 2021, “UT Maki Lab.” and a team from Fukushima Prefectural Taira Technical High School were invited to demonstrate our robots at Roboichi [8], an event held at the Fukushima Robot Test Field alongside the World Robot Summit from October 8 to 10. ARIEL operated for over 10 hours under manual control without any technical issues. It demonstrated its ability to detect maskless faces and to intervene with the detected targets by putting the metallic mask on the portraits and taking it off again.

Comments

Finally, we will close this article with a short comment from each member.

Kazushi Yamamoto: Making ARIEL with my mates was great fun. It was an honor (and a relief) to come in first place.

Sehwa Chun: It’s really cool to work with people with high enthusiasm, diverse talents, and the same goals. And it couldn’t be any better when it’s about underwater robots!

Yuki Sekimori: I would like to thank all my teammates for their outstanding enthusiasm, collaboration, and performance throughout the project. I would also like to thank the members of the Maki Laboratory for their advice and encouragement. Finally, I would like to thank all participants, event organizers, and sponsors for providing us with this wonderful experience.

Chihaya Kawamura: This project was not only challenging but also exciting for me because it was my first time working on robot development every day. The most striking thing I learned through this project is that haste makes waste. We found that when ARIEL goes wrong for unknown reasons, the fastest way to fix it is to go through all the possible causes one by one.

Acknowledgement

The Underwater Robot Convention in JAMSTEC 2021 was supported by The Japan Society of Naval Architects and Ocean Engineers, IEEE/OES Japan Chapter, MTS Japan Section, Techno-Ocean Network, Kanagawa Prefecture, Yokosuka City, Tokyo University of Marine Science and Technology, Japan Agency for Marine-Earth Science and Technology (JAMSTEC), Center for Integrated Underwater Observation Technology at the Institute of Industrial Science, the University of Tokyo, The Nippon Foundation, Misago Co., Ltd., FullDepth Co., Ltd., Aqua Modelers Meeting, and Matsuyama Industry Co., Ltd. We would like to express our sincere appreciation to the sponsors for their strong support and cooperation in realizing this event.

References

[1] Underwater Robot Convention in JAMSTEC 2021 (Japanese). http://jam21.underwaterrobonet.org/

[2] Y. Sekimori, T. Maki, Underwater Robot Convention in JAMSTEC 2020 – All Hands on Deck! Online!!, IEEE OES Beacon Newsletter, 10(1), 39-42 (2021.3)

[3] K. Fujita, Y. Hamamatsu, H. Yatagai, Reflection for Singapore Autonomous Underwater Vehicle Challenge – the Comparison Between SAUVC and a Competition Held in Japan, IEEE OES Beacon Newsletter, 8(2), 64-67 (2019.6)

[4] H. Yamagata, T. Maki, Underwater Robot Convention in JAMSTEC 2018 – from an Educational Perspective, IEEE OES Beacon Newsletter, 7(4), 68-72 (2018.12)

[5] H. Horimoto, T. Nishimura, T. Matsuda, AUV “Minty Roll” and results of “Underwater Robot Convention 2017 in JAMSTEC,” IEEE OES Beacon Newsletter, 6(4), 77-79 (2017.12)

[6] M. Sandler, A. Howard, M. Zhu, A. Zhmoginov and L.-C. Chen, “MobileNetV2: Inverted Residuals and Linear Bottlenecks,” 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2018, pp. 4510-4520, DOI: 10.1109/CVPR.2018.00474.

[7] Maki Laboratory Official YouTube channel. https://www.youtube.com/user/makilabo

[8] Roboichi (Japanese). https://biz.nikkan.co.jp/roboichi/
